why vtuber lip-sync doesn't work on M1 processors

So you want to be a VTuber, but you use macOS. Maybe you've tried VTube Studio or 3tene[1], excited to see that they both support voice-based lip-syncing, only to find that the options seem to be missing or disabled on machines with M1 processors. Why is this?

what is voice-based lip-syncing?

Lip-syncing is the process of moving or changing the mouth shapes on a model in response to the vocal sounds from some audio, usually from a microphone. For example, if you speak the ah sound, you expect the mouth on your VTuber model to open wide. Lip-syncing is desirable because it adds an extra dimension of expression to VTuber models, and also makes it easier to understand what someone is saying.

The difficulty in integrating lip-syncing with VTubing tools is that the sync must not only be accurate, but it must also happen in real-time. This means that the mouth shape changes as soon as audio comes in. If the delay is too long, then the effect will look unnatural. There are many existing tools for lip-syncing from prerecorded audio (such as Rhubarb), but few for real-time audio.
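To get a feel for the real-time constraint, here is a minimal sketch. It is not how VTube Studio, 3tene or any real lip-sync library works: it only maps loudness to a single "mouth open" value, whereas a proper library distinguishes different sounds. The function names, the `gain` and the `smoothing` values are all made up for illustration.

```python
import math


def frame_latency_ms(frame_size: int, sample_rate: int) -> float:
    """Time needed to collect one frame of audio, in milliseconds."""
    return 1000.0 * frame_size / sample_rate


def rms(samples) -> float:
    """Root-mean-square level of a frame of samples in the range -1.0..1.0."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mouth_open(samples, previous: float = 0.0,
               gain: float = 4.0, smoothing: float = 0.5) -> float:
    """Map the loudness of one frame to a 0..1 mouth-open value.

    `gain` and `smoothing` are illustrative assumptions. The result moves
    part of the way from `previous` towards the new target so the mouth
    does not flicker between frames.
    """
    target = min(1.0, rms(samples) * gain)
    return previous + (1.0 - smoothing) * (target - previous)
```

The frame size sets a floor on the delay: `frame_latency_ms(480, 48000)` is 10 ms before any processing has even started, and larger frames or heavier smoothing add to that.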

how lip-syncing is implemented for VTube Studio and 3tene

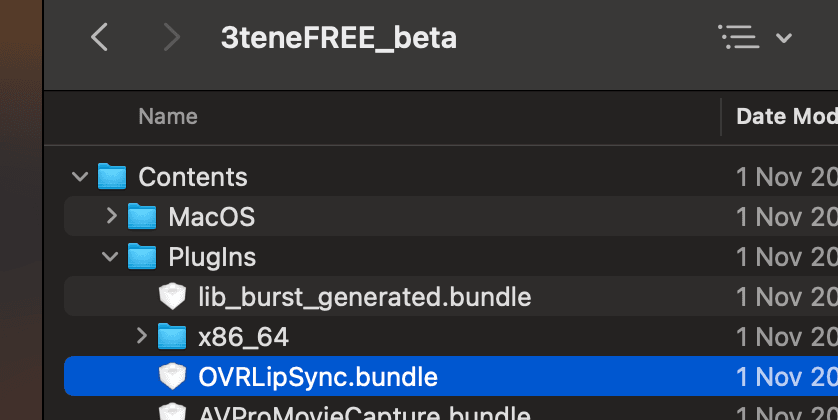

We can examine the application bundles for both tools (right click then Show Package Contents) to

see what libraries they are using. In both cases, they have a file named OVRLipSync.bundle:

OVRLipSync.bundle comes from the Oculus Native distribution of the Oculus Audio SDK, a library used for real-time voice-based lip-syncing. The official documentation seems to be missing, but the sample code included in the download suggests that it is relatively straightforward to use.
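If you would rather not click through Finder, the same check can be scripted, since an .app bundle is just a directory. This is a small sketch; the function name is hypothetical and the application path in the usage note is an assumption about where the app is installed.

```python
from pathlib import Path


def find_plugin_bundles(app_path, name="OVRLipSync.bundle"):
    """Return every path inside an .app bundle whose last component is `name`."""
    root = Path(app_path)
    # rglob walks the whole directory tree below the application bundle.
    return sorted(root.rglob(name))
```

For example, `find_plugin_bundles("/Applications/VTube Studio.app")` would return the location of the plugin if the app lives there, or an empty list if nothing matches.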

If we dig a little deeper, we can find a thread on the Unity issue tracker explaining why OVRLipSync.bundle fails on M1 processors:

OVRLipSync.bundle uses AVX instructions that are not supported by Rosetta 2. It's not Unity that's crashing - it's the Oculus' plugin.

So OVRLipSync.bundle uses processor instructions that Rosetta 2 does not translate, which is why it fails on M1 hardware.

Rosetta 2 and the Intel AVX extensions

Rosetta 2 is a component of macOS that translates Intel x86 instructions into arm64 instructions that the M1 processor can understand. It is essential for running software that has not yet been compiled for Apple Silicon (in this case, Oculus Native) on M1 processors.
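You can check whether a process is being translated by Rosetta 2 by reading the `sysctl.proc_translated` value, which macOS reports as 1 for a translated process and 0 for a native one on Apple Silicon. The sketch below wraps that in Python; the function names are hypothetical, and anything other than a clean 0 or 1 is treated as "unknown" (for example on an Intel Mac, where the key does not exist).

```python
import subprocess


def interpret_proc_translated(output):
    """Interpret the text printed by `sysctl -n sysctl.proc_translated`."""
    value = (output or "").strip()
    if value == "1":
        return "translated"  # x86_64 process running under Rosetta 2
    if value == "0":
        return "native"      # arm64 process running directly on Apple Silicon
    return "unknown"         # key missing or unexpected output


def rosetta_status():
    """Ask sysctl how the current process tree is being run."""
    try:
        result = subprocess.run(
            ["sysctl", "-n", "sysctl.proc_translated"],
            capture_output=True, text=True, check=False,
        )
    except OSError:
        return "unknown"  # sysctl is not available on this system
    if result.returncode != 0:
        return "unknown"
    return interpret_proc_translated(result.stdout)
```

Note that the answer depends on how Python itself was started: a Python interpreter built for x86 will report "translated" on an M1 machine, while an arm64 build will report "native".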

The term AVX (Advanced Vector Extensions) refers to a family of extensions to the x86 instruction set developed by Intel. These extensions contain instructions that allow x86 processors to perform operations on multiple pieces of data at the same time, effectively increasing the speed at which data can be processed. So if Rosetta 2 can emulate the rest of the x86 instruction set, why can't it emulate AVX instructions?

A comment on Hacker News suggests an answer: Intel has patented the behaviour of the AVX instructions, which would prevent Apple from legally emulating them.[2]

possible workarounds and the future

There are three main paths forward for those who want to use lip-syncing with VTube Studio or 3tene:

- Wait for Apple to add AVX support to Rosetta 2

- Wait for Oculus to compile a new distribution of the Oculus Audio SDK that does not use the AVX instruction set

- Wait for VTube Studio or 3tene to use or implement a different lip-syncing library

There is a lesser-known VTubing tool named Webcam Motion Capture, also compatible with macOS, that uses uLipSync instead of Oculus Native (as mentioned in Webcam Motion Capture's included readme.txt). However, uLipSync is primarily designed for manipulating 3D Unity models, which makes it unlikely to be suitable for use in VTube Studio (which uses Live2D models).

There is another option for those using VTube Studio. VTube Studio exposes an API which allows external software to set the parameter values of the Live2D model. If you are technically inclined, you can write your own lip-syncing plugin.
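As a rough idea of what such a plugin would send, here is a sketch that builds a single parameter-injection message. The field names follow my reading of the VTube Studio public API documentation, so treat them as assumptions and check them against the current docs. The parameter name `MouthOpen` and the helper name `build_inject_request` are likewise illustrative. The sketch only builds the JSON; a real plugin also has to open a WebSocket connection to VTube Studio and authenticate before its requests are accepted.

```python
import json
import uuid


def build_inject_request(values, request_id=None):
    """Build a parameter-injection message as a JSON string.

    `values` maps parameter names to numbers, e.g. {"MouthOpen": 0.5}.
    The message layout is an assumption based on the public API docs.
    """
    message = {
        "apiName": "VTubeStudioPublicAPI",
        "apiVersion": "1.0",
        "requestID": request_id or uuid.uuid4().hex,
        "messageType": "InjectParameterDataRequest",
        "data": {
            "parameterValues": [
                {"id": name, "value": float(value)}
                for name, value in values.items()
            ],
        },
    }
    return json.dumps(message)
```

A plugin would call something like `build_inject_request({"MouthOpen": 0.5})` for each audio frame and send the result over its WebSocket connection, with the value coming from whatever lip-sync analysis it performs on the microphone input.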

For now, we will have to wait to see what the future of VTubing on macOS holds.

footnotes

[1] Read as mi-te-ne in Japanese, roughly meaning "look!"

[2] If the AVX instructions are patented, then why can AMD processors run them? AMD has a royalty-free license to use any Intel patent after an antitrust lawsuit.